When I started to play with AI tools for coding, I had a very simple fantasy in my head. I thought I would open my editor, connect a smart agent, and it would just understand my project, my style, my habits. It would read the whole codebase, follow my taste, and help me build features almost like a real teammate.

Reality was not like that.

Most of the time it felt like sitting with a very fast junior developer who forgot everything every five minutes. I had to repeat how I name things, how I organize folders, what patterns I like, what I hate. I had to review every file anyway. Sometimes I ended the day more tired with the agent than without it.

This feeling reminded me of something from my early years in development. Around 2016 I learned about Test-Driven Development. I liked the theory: first you write tests that describe what the code should do, then you write the actual code. The idea is clean and strict. But in practice I never went fully "tests first" every day. For me, writing software is a creative process. I think about structure and patterns before I start, but often I just open a file and begin to code. The shape of the solution changes as I build it. Writing tests first can lead to a better structure, but for me it also slows down that first creative part.

Later I worked at a company with a complex product, and there I saw another side of this problem. We had user stories with personas, acceptance criteria, and all the classic stuff. But often the stories were not precise. The product owner had one picture in mind, the story said something slightly different, I built my own interpretation, and QA tested against their own version. We needed several rounds of "this is not what we meant" before everyone was happy. I learned one hard lesson: if people are not aligned on what "done" means, you pay for it with time and frustration.

Now fast-forward to today and AI agents. At the beginning I used them like a smarter autocomplete. I wrote one long message with half the context of my problem, a few files, and some rules, then asked for a feature. Sometimes the result was okay. Often it looked good on the surface but was wrong in the details: wrong edge cases, wrong assumptions about how things work in my system, wrong style. It felt like those bad user stories again. The agent and I did not share the same idea of what we were doing.

After many of these attempts I had two simple but important realizations.

The first one is about memory. The agent does not remember my life. It does not know my old tickets or previous chats unless I paste them in again. It only sees the text I give it in the current call. That "window" is its whole world. If I fill it with noise, like long logs, old messages, or giant blobs of code, the key information gets buried. When I give it a small, clean description plus only the files that really matter, the answers are much better. Same model, but the behaviour changes a lot depending on what I feed it.

The second realization is about long conversations. When I stayed in one chat for too long, the whole thing turned into soup. Early decisions, later fixes, false starts, half ideas, all mixed together. I kept correcting the agent inside that same long thread: "No, not like that." "Wait, do it this other way." After many messages it felt like both of us had lost the main story. If I were the model reading that history, I would also be confused. The most natural "next step" after such a messy history is just more random changes and more corrections. No wonder the quality drops.

Because of this, I started to change my way of working. I wanted something that still lets me be creative, but also gives the agent a fair chance to help. What I found for myself is a pattern that is very simple in words: first understand, then outline, then build. It is not a strict method, but more like a small mental rule that stops me from jumping too fast into "please write code".

When I say "understand", I mean that before the agent touches anything, it needs to study the code and explain it back to me. For example, imagine I want to add a new login flow, like magic link sign-in, to an old project. In the past I would tell the agent directly "add magic link login" and hope it found the right files. Now I do it differently. I ask the agent to read the current login code and write a short note for me. In that note I want to see where we handle credentials, how sessions are created, how emails are sent, and what looks strange or special. I tell it clearly not to change any code, only to observe and report.

When I get this note back, I can read it in a couple of minutes. If something is missing or wrong, I correct it. Maybe there is an old module we actually do not use anymore, or a path that is critical and must not break. After this, I have a compact view of the current situation, and the agent has just processed the same information. We are looking at the same small map, not at a jungle of files.

The next step for me is the "outline". Based on that map, I ask the agent to describe how we should approach the change. I want text that reads almost like a small plan for a teammate: which parts of the system need to change, what new behaviour we add, which old behaviour must stay as it is, how we will test, and which risks we see. I still do not give permission to edit the code here. I first read the outline. This is where I catch the big mistakes. Maybe the agent wants to move login logic into a new place that does not fit the rest of the architecture. Maybe it forgets some important validation. Fixing these things in the outline is very cheap compared to cleaning up a half-implemented wrong solution.

When the outline feels right, I finally move to the "build" part. Now I tell the agent to follow the outline and actually change the files. I ask it to do this in small chunks, show me the changes, run tests where possible, and adjust when something fails. My review is easier now. I do not need to evaluate the whole concept again, only to check if the concrete changes match the outline and keep the code quality I want. At the end I always ask for a short summary file. In that summary I want to know what changed, where, and how to verify the behaviour. This is mainly for my future self. When I open the project after a month, I can read that small file and quickly remember why some code looks different.
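If you like to keep these steps repeatable, the three phases above can be sketched as prompt templates. This is only a minimal illustration: the `Phase` enum, the `phase_prompt` helper, and the exact wording of each instruction are my own hypothetical names, not part of any editor's or agent's API. The one rule it enforces is the one that matters to me: outline and build only start from the reviewed output of the step before.

```python
from enum import Enum


class Phase(Enum):
    UNDERSTAND = "understand"
    OUTLINE = "outline"
    BUILD = "build"


# Hypothetical instruction text for each phase; adapt the wording to your own project.
PHASE_INSTRUCTIONS = {
    Phase.UNDERSTAND: (
        "Read the code related to: {topic}. Write a short note describing where it lives, "
        "how it works, and anything unusual. Do NOT change any code."
    ),
    Phase.OUTLINE: (
        "Using the note below, write a plan for: {topic}. List the parts that change, "
        "the new behaviour, the behaviour that must stay the same, how we will test, "
        "and the risks. Do NOT change any code.\n\nNote:\n{context}"
    ),
    Phase.BUILD: (
        "Implement the outline below for: {topic}. Work in small chunks, show each change, "
        "run the tests where possible, and finish with a summary of what changed, where, "
        "and how to verify it.\n\nOutline:\n{context}"
    ),
}


def phase_prompt(phase: Phase, topic: str, context: str = "") -> str:
    """Build the prompt for one phase; later phases carry the reviewed output of the previous one."""
    if phase is not Phase.UNDERSTAND and not context.strip():
        raise ValueError(f"{phase.value} needs the reviewed output of the previous phase")
    # Templates without {context} simply ignore the extra keyword argument.
    return PHASE_INSTRUCTIONS[phase].format(topic=topic, context=context)
```

In practice I would call `phase_prompt(Phase.UNDERSTAND, "magic link login")`, read and correct the note, then pass that corrected note as `context` to the outline phase.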

There is also the problem of long sessions. Even with this flow, some tasks take many steps and many messages: test output, lint warnings, extra fixes, different branches of the outline. At some point I can feel that the conversation is getting heavy and the agent is not as sharp. The important facts are still there, but buried under noise. Now, when this happens, I make a checkpoint. I ask the agent to stop editing and write a clean state file that explains where we are: finished parts, things still open, important design decisions, and any tricky parts of the code. Then I close the chat and open a new one. In the new chat I paste only the original goal and that state file. It feels like loading a saved game without all the junk logs. The agent sees a simple, focused picture again and the quality jumps back up.
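The checkpoint idea can be sketched in a few lines of Python. This is a hypothetical illustration, not a feature of any particular editor: the `Checkpoint` class, the section names, and the `STATE.md` file name are assumptions of mine. It only shows the shape of the state file and the fact that the fresh chat receives the goal plus that file, and nothing else.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Checkpoint:
    """Hypothetical structure for the state file written before restarting a chat."""
    done: list[str] = field(default_factory=list)
    open_items: list[str] = field(default_factory=list)
    decisions: list[str] = field(default_factory=list)
    tricky_parts: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        """Render the state as a small markdown file, one section per category."""
        sections = [
            ("Finished", self.done),
            ("Still open", self.open_items),
            ("Design decisions", self.decisions),
            ("Tricky parts", self.tricky_parts),
        ]
        lines = ["# State"]
        for title, items in sections:
            lines.append(f"\n## {title}")
            if items:
                lines.extend(f"- {item}" for item in items)
            else:
                lines.append("- (none)")  # keep empty sections visible instead of hiding them
        return "\n".join(lines) + "\n"


def fresh_session_prompt(goal: str, state_file: Path) -> str:
    """Everything the new chat sees: the original goal plus the saved state, no old history."""
    return f"Goal:\n{goal}\n\nCurrent state:\n{state_file.read_text(encoding='utf-8')}"
```

The design choice is deliberate: the new session is built only from two small, reviewed texts, so none of the noisy back-and-forth from the old chat leaks into the new window.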

In parallel, I also started collecting my own rules in small "guides" instead of one giant prompt. A guide for me is just a small document or folder that describes how I like to handle a specific type of work. For example, I might have a refactoring guide where I write down my rules for safe refactors, some examples of good and bad changes, and maybe a quick checklist. I might have a database change guide that explains how we deal with migrations, backups, and rollbacks. I might have a writing guide for documentation with my preferred tone and structure.

These guides live in my project, usually as simple markdown files next to the code or in a dedicated folder. When I ask the agent to do a task of that type, I point it to the relevant guide. Instead of trying to encode all my style in every prompt, I just say "follow the refactor guide in this path" or "follow the docs guide here". The agent can read that file at the start of the task and then work with that frame in mind. The big advantage is that I can improve these guides slowly. If I see a mistake repeat, I add a new line to the guide. If my taste changes, I update the guide. It is versioned with the rest of the code, and I can share it with other people in the team so we all speak the same language.
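A minimal sketch of pointing the agent at a guide instead of pasting all the rules, assuming guides live in a `guides/` folder in the repository. The task types, file names, and the `task_prompt` helper are hypothetical examples, not a convention of any tool. Tasks without a matching guide just pass the request through, which fits the small changes where I skip the ritual anyway.

```python
from pathlib import Path

# Hypothetical mapping from task type to a guide file in the repo; names and paths are examples.
GUIDES = {
    "refactor": Path("guides/refactoring.md"),
    "database": Path("guides/database-changes.md"),
    "docs": Path("guides/documentation.md"),
}


def task_prompt(task_type: str, request: str, root: Path = Path(".")) -> str:
    """Point the agent at the relevant guide instead of repeating every rule in the prompt."""
    guide = GUIDES.get(task_type)
    if guide is None:
        return request  # small or unusual tasks: no guide, just the request
    guide_path = root / guide
    if not guide_path.is_file():
        # Fail loudly: a missing guide usually means the path in GUIDES is out of date.
        raise FileNotFoundError(f"guide for '{task_type}' not found at {guide_path}")
    return f"Read and follow the guide at {guide.as_posix()} before starting.\n\n{request}"
```

Because the prompt only references the file, improving a guide in the repository changes every future task of that type without touching the prompt code.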

Of course I do not need this full ritual for every single change. For tiny things like changing a label, fixing a simple bug, or formatting some code, I still just ask the agent directly and scan the diff. There is no point in writing an outline for a one line fix. For medium things, like adding a new field in a form, sometimes I skip the research step and write a short outline myself, then let the agent implement it. For bigger and risky things, I use the full flow with understand, outline, build, plus guides and checkpoints. The amount of structure grows together with the size and danger of the change.

One extra habit that I enjoy is using the agent at the end of a feature to improve the guides and the process. When a task is complete, tests pass, and I am satisfied with the result, I often ask the agent to look over the journey. It can compare the original goal, the outline, and the final code. Then I ask questions like "where did we waste time", "what was unclear in the description", "which mistake appeared more than once". The answers are not always perfect, but they often point to small improvements I can make. Maybe I realize I forgot to mention logging rules in the outline. Maybe we discovered a common edge case that deserves a note in the relevant guide. I then change my templates and guides a little. Over time, this makes the system more solid.

There is one danger in all this. As I move more structure into specs, outlines, and guides, I also move more power there. A bad line of code is just a bad line that I can fix. A bad phrase in a guide or a wrong detail in the outline can create many bad lines of code. So I try to keep my attention on those higher-level texts. I let the agent do the heavy lifting of reading and writing code, but I do not blindly trust it to define the rules. I read what it proposes. I choose what becomes part of my permanent guides.

Because of this experience, when people ask me how to get more value from agents in development, I usually do not start talking about which model is slightly better this month. I think models matter, but for daily work the bigger change for me came from this way of working. I treat the agent like a very fast helper with zero long term memory. I am responsible for the context I give it, the clarity of the spec, and the quality of the guides. It turns out that if I do my part there, most strong models can give me decent results. If I give bad input, even the best model will just create nice looking mistakes.

So where does this leave me with all the new tools and models that appear every week? I still like to try them, but I am not chasing each one as a new religion. I have my basic flow: understand, outline, build, plus my growing set of guides and a habit of making checkpoints when things get noisy. A new model is just a different engine under the same car. If it runs better, nice. If not, I can switch back. The important part is that I finally feel I have a way to use agents that supports my creative style instead of fighting it.

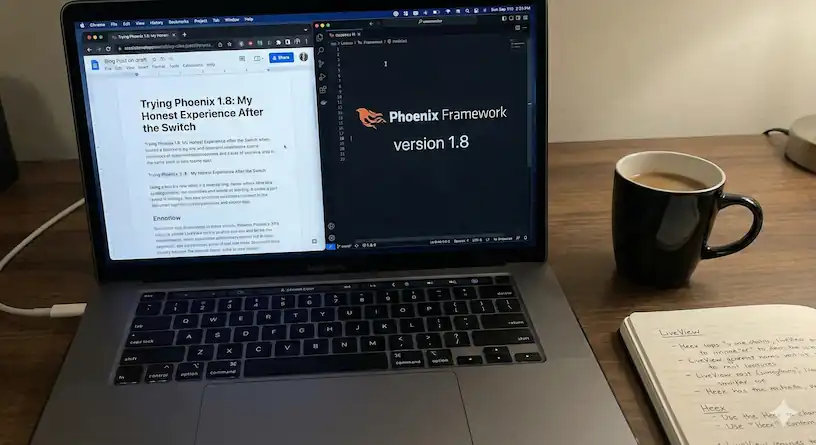

Windsurf

All my projects, and even this website, are built using Windsurf Editor. Windsurf is the most intuitive AI coding experience, built to keep you and your team in flow.