I didn’t set out to build a blogging platform.

It started with a coffee and a mildly depressing screen share.

A friend who does SEO marketing was proudly walking me through his setup. Dozens of small WordPress sites, all slightly different, all pointing to the same funnels. Same theme, same structure, same half-broken plugins. Pages took forever to load, URLs were a mess, multi-language was duct-taped together with plugins, and tracking scripts were scattered everywhere like confetti.

Yet it worked. The leads were coming in, and so was the money.

Watching that, I had two reactions at the same time: respect for the hustle, and irritation as an engineer. The business model made sense. The infrastructure sucked. I kept thinking: if this stack can make money in that condition, what would happen if I built the same idea properly?

That question refused to leave my head, so I started sketching.

I wanted many domains, not one. I wanted real multi-language content, not auto-translated junk. I wanted clean URLs, good SEO hygiene, fast pages, and integrations that weren’t glued together by ten plugins fighting each other. And I wanted AI to do the boring part: reading the internet, spotting interesting topics, and drafting articles so I could focus on review and publishing.

The natural choice for me was Elixir and Phoenix. I liked the idea of a single backend, highly concurrent, sitting behind multiple domains without breaking a sweat. PostgreSQL would keep everything structured and indexed exactly the way I wanted. No plugins, no “maybe the theme implements this somehow.” Just schemas and code I control.

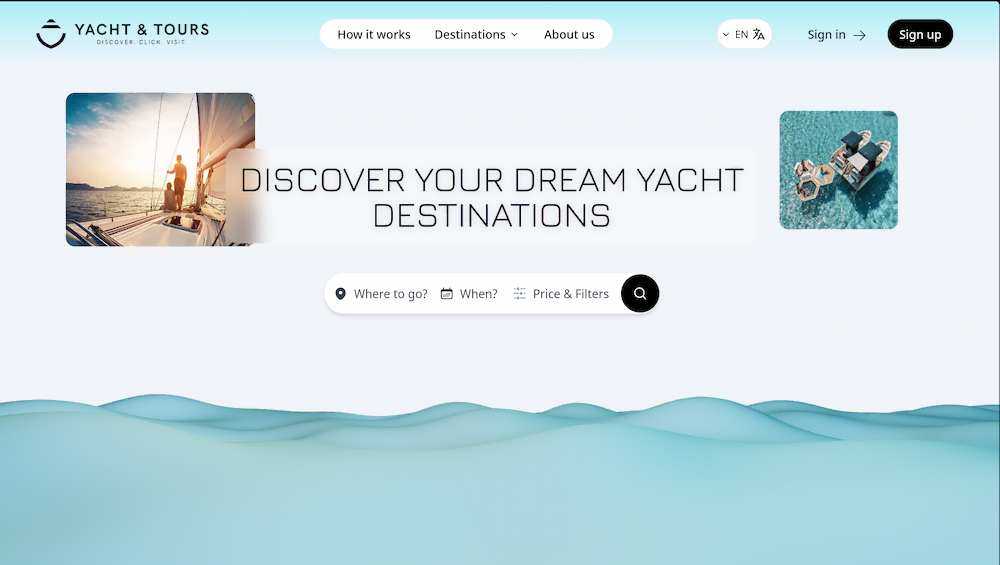

The core idea fell into place quickly: every website is its own tenant, and everything hangs off that. A Website record owns its domain, branding, SEO settings, hero section, supported languages, and its status. Nothing exists in the system without pointing back to a website. If you ask the app anything, the first step is always the same: look at the host, resolve the website, and only then do anything else.
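Here is a minimal sketch of the "resolve the website first" rule. It is written in Python rather than the platform's Elixir; in Phoenix, this kind of step would typically be a plug. The `Website` fields, the in-memory `WEBSITES` registry, and the `resolve_website` helper are hypothetical, and the real lookup would query PostgreSQL.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Website:
    """Hypothetical tenant record; field names are illustrative."""
    id: int
    domain: str
    supported_languages: tuple[str, ...]
    status: str = "active"


# Hypothetical in-memory registry keyed by normalized domain (the real app would query PostgreSQL).
WEBSITES = {
    "elchemista.com": Website(1, "elchemista.com", ("en", "it")),
    "example.org": Website(2, "example.org", ("en",), status="disabled"),
}


def normalize_host(host: str) -> str:
    """Lowercase the Host header and drop any port.

    Treating "www." as an alias of the bare domain is an assumption of this sketch.
    """
    host = host.strip().lower().split(":", 1)[0]
    return host[4:] if host.startswith("www.") else host


def resolve_website(host: str) -> Optional[Website]:
    """First step of every request: host -> active website, or None (e.g. render a 404)."""
    website = WEBSITES.get(normalize_host(host))
    if website is None or website.status != "active":
        return None
    return website


# Usage: every later query receives site.id, never a bare host string.
site = resolve_website("WWW.Elchemista.com:443")
```

Stripping `www.` is only one way to handle aliases. A platform could just as easily store each alias as its own domain entry.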

Once that was set, the rest became a matter of discipline. Categories, tags, and articles all carry a website_id and a lang. If I’m serving elchemista.com in Italian, I know I’m working within a specific website and a specific language, and all queries are scoped that way. There’s no shared global category called “tech” that accidentally leaks across domains. Each site has its own tree of categories and tags, and each language has its own localized slugs.
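The scoping rule maps naturally onto a composite unique constraint. This sketch uses Python’s built-in `sqlite3` as a stand-in for PostgreSQL. The `categories` table and its columns are assumptions, not the platform’s actual schema. The sketch shows two websites each owning a `tech` slug independently, a `CHECK` that allows only lowercase language codes, and a query helper that cannot run without both a website and a language.

```python
import sqlite3

# In-memory SQLite stands in for PostgreSQL; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE categories (
        id INTEGER PRIMARY KEY,
        website_id INTEGER NOT NULL,
        lang TEXT NOT NULL CHECK (lang = lower(lang)),  -- language codes are always lowercase
        slug TEXT NOT NULL,
        name TEXT NOT NULL,
        UNIQUE (website_id, lang, slug)                 -- unique per site and language, never globally
    );
    """
)

conn.executemany(
    "INSERT INTO categories (website_id, lang, slug, name) VALUES (?, ?, ?, ?)",
    [
        (1, "en", "tech", "Tech"),
        (1, "it", "tecnologia", "Tecnologia"),
        (2, "en", "tech", "Tech"),  # another website can reuse the same slug
    ],
)


def categories_for(website_id: int, lang: str) -> list[str]:
    """Every query is scoped by website_id and lang; there is no global listing."""
    cur = conn.execute(
        "SELECT slug FROM categories WHERE website_id = ? AND lang = ? ORDER BY slug",
        (website_id, lang.lower()),
    )
    return [slug for (slug,) in cur.fetchall()]
```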

The URL scheme looks simple on the outside:

`https://{domain}/{lang}/{category_slug}/{article_slug}`

Behind that simplicity there are a few non-negotiable rules. Articles are always looked up by website, language and slug only. The category segment in the URL is not used to find the article; it only exists to express the current canonical path. That means I can move an article from one category to another without breaking anything. If someone visits the old URL, I find the article by slug, compare the category in the path with the article’s current category, and if they don’t match I send a 301 to the correct URL. Old links keep working, search engines get a clean signal, and I don’t have to maintain a graveyard of manual redirects.
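The lookup-then-compare rule fits in a few lines. This is a hypothetical Python sketch, not the platform’s Phoenix code. `ARTICLES`, `resolve_article`, and the `(status, location)` return shape are illustrative. The category segment is only compared, never used as a key, so a mismatch produces a 301 to the canonical path.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Article:
    website_id: int
    lang: str            # stored lowercase
    slug: str            # localized, unique per (website_id, lang)
    category_slug: str   # the article's *current* category


# Hypothetical index: the key deliberately leaves the category out.
ARTICLES: dict[tuple[int, str, str], Article] = {}


def add_article(article: Article) -> None:
    ARTICLES[(article.website_id, article.lang, article.slug)] = article


def canonical_path(article: Article) -> str:
    return f"/{article.lang}/{article.category_slug}/{article.slug}"


def resolve_article(
    website_id: int, lang: str, category_slug: str, slug: str
) -> tuple[int, Optional[str]]:
    """Return (HTTP status, redirect location). Lookup uses website, lang and slug only."""
    article = ARTICLES.get((website_id, lang.lower(), slug))
    if article is None:
        return 404, None
    # The path's category (and language casing) is only compared, never used to find the article.
    if category_slug != article.category_slug or lang != article.lang:
        return 301, canonical_path(article)
    return 200, None


# Usage: the article moved from "tech" to "elixir"; the old link still works via a 301.
add_article(Article(1, "en", "phoenix-liveview-tips", "elixir"))
```

Because the key ignores the category, moving an article only changes its `category_slug`. Every URL that ever pointed at it converges on a single 301 target, with no redirect table to maintain. In this sketch, the same check also redirects an uppercase language segment such as `/EN/...` to its lowercase form.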

Multi-language is treated the same way: not as an optional plugin, but as a property of the data model. Each website has a list of supported languages. Each piece of content has a language code, always normalized to lowercase. For the same article concept, I have separate records per language, each with its own localized slug, SEO title, description, Open Graph data, and Twitter metadata. That lets me generate proper hreflang tags and per-language sitemaps, and it keeps Italian paths Italian, English paths English, and so on. There’s no magic translation layer pretending that one URL is “kind of” the same in four languages.
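Per-language records make hreflang generation a simple loop. Here is a Python sketch that assumes the language versions of one article have already been loaded together, for instance through a hypothetical shared translation key. `Translation` and `hreflang_tags` are illustrative names.

```python
from dataclasses import dataclass
from html import escape


@dataclass(frozen=True)
class Translation:
    lang: str            # always lowercase, e.g. "en", "it"
    category_slug: str   # localized category slug
    slug: str            # localized article slug


def hreflang_tags(domain: str, translations: list[Translation]) -> list[str]:
    """Build one <link rel="alternate" hreflang=...> tag per language version, sorted by lang."""
    tags = []
    for tr in sorted(translations, key=lambda item: item.lang):
        href = f"https://{domain}/{tr.lang}/{tr.category_slug}/{tr.slug}"
        tags.append(f'<link rel="alternate" hreflang="{escape(tr.lang)}" href="{escape(href)}" />')
    return tags
```

Each language version of the page should emit the full set, including a tag pointing at itself. That is why the function takes the whole group rather than just the sibling translations.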

Once the content structure was solid, I turned to money and measurement. In my friend’s WordPress setup, analytics and monetization were afterthoughts. Tracking codes lived in random plugin fields, AdSense blocks were copy-pasted into widgets, and affiliate links were scattered inside the content. I wanted those things to be modeled, not sprayed around.

So each website can declare its own Google Analytics measurement ID. The platform knows whether to inject a default tracking snippet or a custom one. The same applies to AdSense: settings are per website, not global. If I want one domain to be heavy on ads and another to stay clean and editorial, I just change the configuration, not the templates.
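A per-website integration record turns “which snippet do I inject?” into a plain function. In this Python sketch, the `WebsiteIntegrations` fields and the rule that a custom snippet overrides the default are assumptions. The default output is Google’s standard gtag.js loader for a GA4 measurement ID. AdSense settings could sit on the same per-website record in the same way.

```python
import re
from dataclasses import dataclass
from typing import Optional

# GA4 measurement IDs look like "G-" followed by uppercase letters and digits.
GA4_ID = re.compile(r"G-[A-Z0-9]+")


@dataclass
class WebsiteIntegrations:
    """Hypothetical per-website settings; nothing here is global."""
    ga_measurement_id: Optional[str] = None
    custom_tracking_snippet: Optional[str] = None


def tracking_snippet(cfg: WebsiteIntegrations) -> str:
    """Custom snippet wins (assumed precedence), then the default gtag.js loader, else nothing."""
    if cfg.custom_tracking_snippet:
        return cfg.custom_tracking_snippet
    mid = cfg.ga_measurement_id
    if not mid:
        return ""
    # Validate instead of escaping: the ID is interpolated into both a URL and a JS string.
    if not GA4_ID.fullmatch(mid):
        raise ValueError(f"not a GA4 measurement ID: {mid!r}")
    return (
        f'<script async src="https://www.googletagmanager.com/gtag/js?id={mid}"></script>\n'
        "<script>\n"
        "  window.dataLayer = window.dataLayer || [];\n"
        "  function gtag(){dataLayer.push(arguments);}\n"
        "  gtag('js', new Date());\n"
        f"  gtag('config', '{mid}');\n"
        "</script>"
    )
```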

Leads are handled as first-class records too. Contact forms are tied to websites and can store structured context: which article generated the lead, where it came from, what campaign it belongs to. Articles can have a dedicated affiliate link attached to them, with a reference code, company name, artwork, description and click counter. When an affiliate link changes, I update one record and move on. I don’t open ten posts to replace a URL manually.
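Modeling the affiliate link as its own record is what makes the one-edit update possible. In this hypothetical Python sketch, `AffiliateLink`, `AFFILIATES`, and `track_click` are illustrative names. Articles hold a reference to the link rather than a pasted URL, and each click bumps the counter before returning the outbound URL.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AffiliateLink:
    id: int
    url: str
    reference_code: str
    company_name: str
    artwork_url: str
    description: str
    clicks: int = 0


@dataclass
class Article:
    slug: str
    affiliate_link_id: Optional[int] = None  # a reference, not a URL pasted into the body


# Hypothetical store of affiliate records keyed by id.
AFFILIATES: dict[int, AffiliateLink] = {}


def track_click(article: Article) -> Optional[str]:
    """Count a click and return the outbound URL, or None if no link is attached."""
    if article.affiliate_link_id is None:
        return None
    link = AFFILIATES[article.affiliate_link_id]
    link.clicks += 1
    return link.url
```

In a PostgreSQL-backed app, the counter is better incremented atomically in SQL (`UPDATE ... SET clicks = clicks + 1`) than read, modified, and written back in application code, so concurrent clicks aren’t lost.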

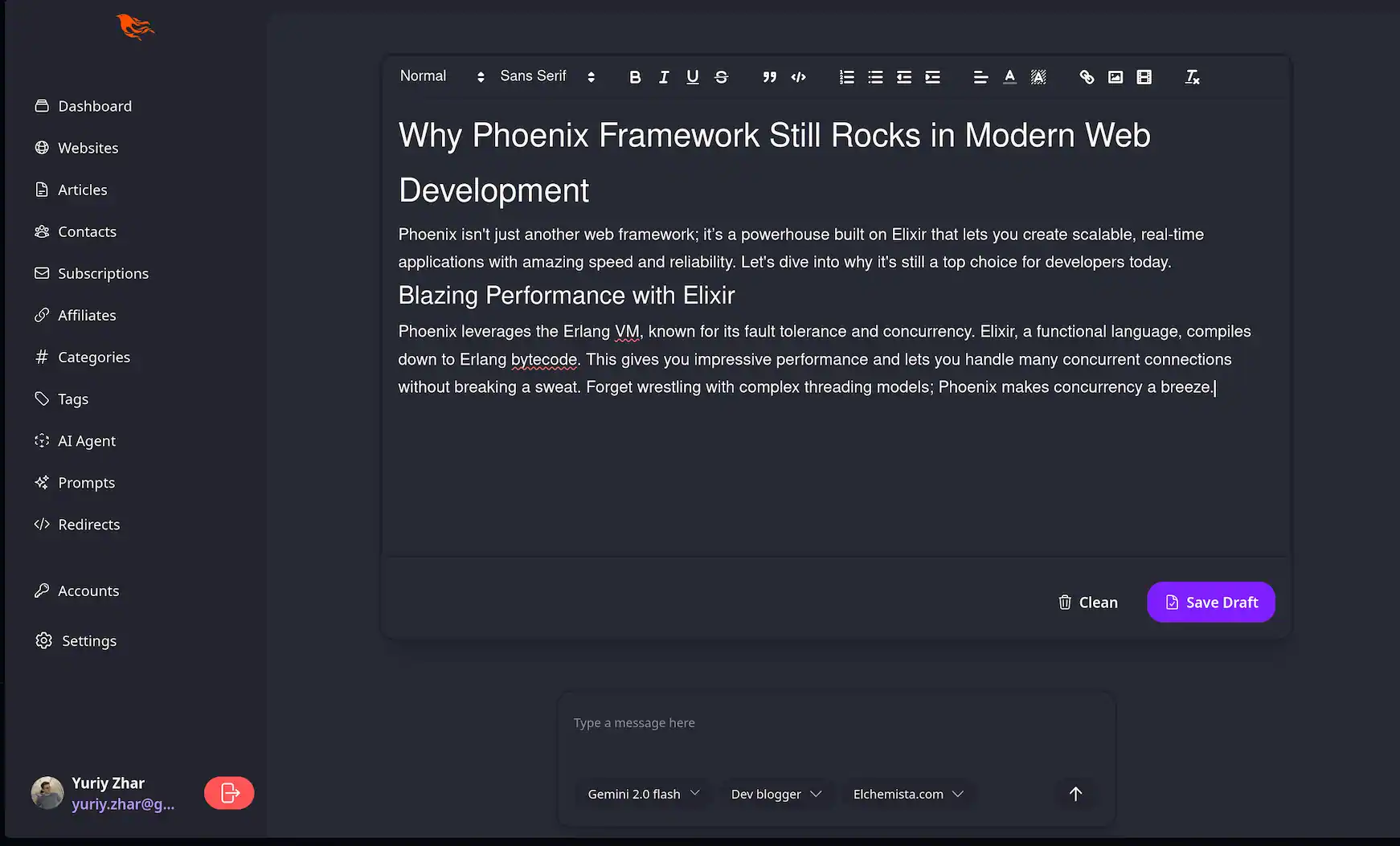

All of that would already be a decent platform for a human-only workflow. But I built this with AI in mind from the beginning.

The “agentic” part is a set of background processes that behave a bit like obsessive interns who never get tired. I point them at sources: RSS feeds, developer blogs, news outlets, product changelogs, social accounts. I give them prompts that describe the voice and depth I want: technical, opinionated, code examples allowed, no fluff, or more lightweight and beginner-friendly if the site needs that. I assign them websites and languages.
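The agent setup described above is mostly configuration. Here is a Python sketch with hypothetical names (`AgentConfig`, `targets`). An agent carries its sources, its prompt, and a map of website IDs to languages. Expanding that map checks each language against the website’s supported list, so an agent can’t draft in a language the site doesn’t serve.

```python
from dataclasses import dataclass, field


@dataclass
class AgentConfig:
    name: str
    sources: list[str]   # RSS feeds, blogs, changelogs, ...
    prompt: str          # the voice and depth this agent should write in
    # website_id -> languages this agent drafts in (hypothetical shape)
    assignments: dict[int, list[str]] = field(default_factory=dict)


def targets(agent: AgentConfig, supported: dict[int, list[str]]) -> list[tuple[int, str]]:
    """Expand assignments into (website_id, lang) pairs, rejecting unsupported languages."""
    pairs = []
    for website_id, langs in sorted(agent.assignments.items()):
        allowed = supported.get(website_id, [])
        for lang in langs:
            code = lang.lower()
            if code not in allowed:
                raise ValueError(f"website {website_id} does not support {code!r}")
            pairs.append((website_id, code))
    return pairs
```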

From there, they watch. When an agent finds something promising (a new library, an interesting trend, a good story angle), it doesn’t publish anything. It creates a draft article, already assigned to the right website and language. It proposes a title and a slug, writes a summary, fills in the main body in markdown, suggests a cover image, and even pre-fills SEO fields like meta title and description. If it recognizes a product that matches one of my affiliate programs, it can also suggest that relationship.

My job shifts from “write everything from scratch” to “review, correct, and hit publish.” I still control what goes live and how it sounds, but I don’t have to start every single piece from a blank page. The agents keep the queue of drafts warm across multiple domains and languages, without me needing to read the entire internet every morning.
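The draft-then-review flow can be sketched in Python as follows. Everything here is illustrative: `Draft`, `draft_from_agent`, `publish`, and the 60/160-character meta truncation (a common SEO rule of thumb, assumed rather than taken from the platform). The point is structural. Agent output always lands with `status="draft"`, and only an explicit, human-triggered `publish` changes that. The slug helper folds accents, so an Italian title still yields an ASCII path segment.

```python
import re
import unicodedata
from dataclasses import dataclass
from typing import Optional


def slugify(title: str) -> str:
    """ASCII slug from a localized title, e.g. 'Perché Elixir?' -> 'perche-elixir'."""
    # NFKD splits accented letters into base letter + combining mark; the ASCII encode drops the marks.
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")


@dataclass
class Draft:
    website_id: int
    lang: str
    title: str
    slug: str
    summary: str
    body_markdown: str
    meta_title: str
    meta_description: str
    cover_image_url: Optional[str] = None
    suggested_affiliate_id: Optional[int] = None
    status: str = "draft"               # agents can only ever produce drafts
    reviewed_by: Optional[str] = None


def draft_from_agent(website_id: int, lang: str, title: str, summary: str, body: str) -> Draft:
    """What an agent hands back: a pre-filled draft, never a published article."""
    return Draft(
        website_id=website_id,
        lang=lang.lower(),
        title=title,
        slug=slugify(title),
        summary=summary,
        body_markdown=body,
        meta_title=title[:60],            # assumed length limits
        meta_description=summary[:160],
    )


def publish(draft: Draft, reviewer: str) -> Draft:
    """Publishing is a separate step that requires a named human reviewer."""
    if not reviewer:
        raise ValueError("a human reviewer is required to publish")
    draft.status = "published"
    draft.reviewed_by = reviewer
    return draft
```

This `slugify` drops characters that have no ASCII decomposition. It would produce empty or partial slugs for scripts such as Greek or Japanese, so a site serving those languages would need a different strategy.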

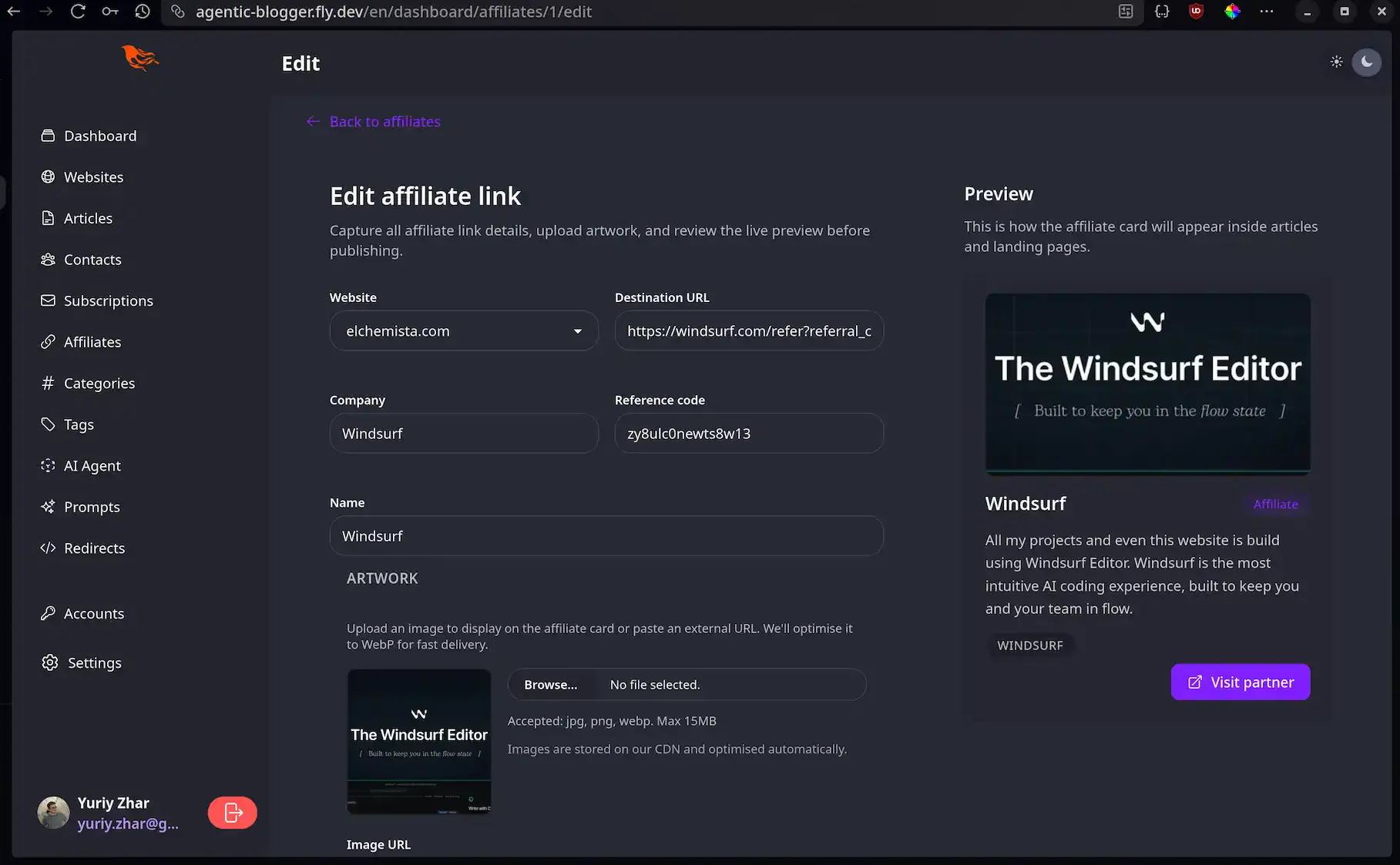

The admin you’d see if you logged in reflects this philosophy. It isn’t glamorous, but it’s tight: a section for websites where I tune domains, layouts, hero sections, supported languages, and integrations; a section for articles where metadata, content, SEO and connections like affiliates or contact forms live side by side; a space for affiliates with a live preview of how cards will look; an area where AI agents and prompts can be configured and adjusted over time. Everything is explicit. There’s no mystery plugin hiding logic in some unknown table.

In the end, I didn’t reinvent the idea my friend was using. I still believe in multiple niches, multiple domains, and many small, focused sites that capture leads. What I refused to accept was the infrastructure that usually comes with that strategy. Instead of a pile of cloned WordPress installations held together by plugins and luck, I now have a single, fast, Elixir-based platform that understands domains, languages, SEO and AI as first-class concepts.

Same business model. Completely different foundation.

Windsurf

All my projects, including this website, are built with the Windsurf Editor. Windsurf is the most intuitive AI coding experience, built to keep you and your team in flow.

Get in Touch

If you’d like a platform like this, or a custom multi-domain, AI-powered blog tailored to your niche, get in touch and we can build it for your project.