We’re all talking about AI, right? The smart assistants, the incredible images, the way large language models (LLMs) are reshaping everything from customer service to creative writing. But there's a quieter, often overlooked battle being fought behind the scenes, a high-stakes game that determines just how powerful and sustainable these AI wonders can become: the fight against heat.

Welcome to the AI Factory Floor: More Power, More Problems

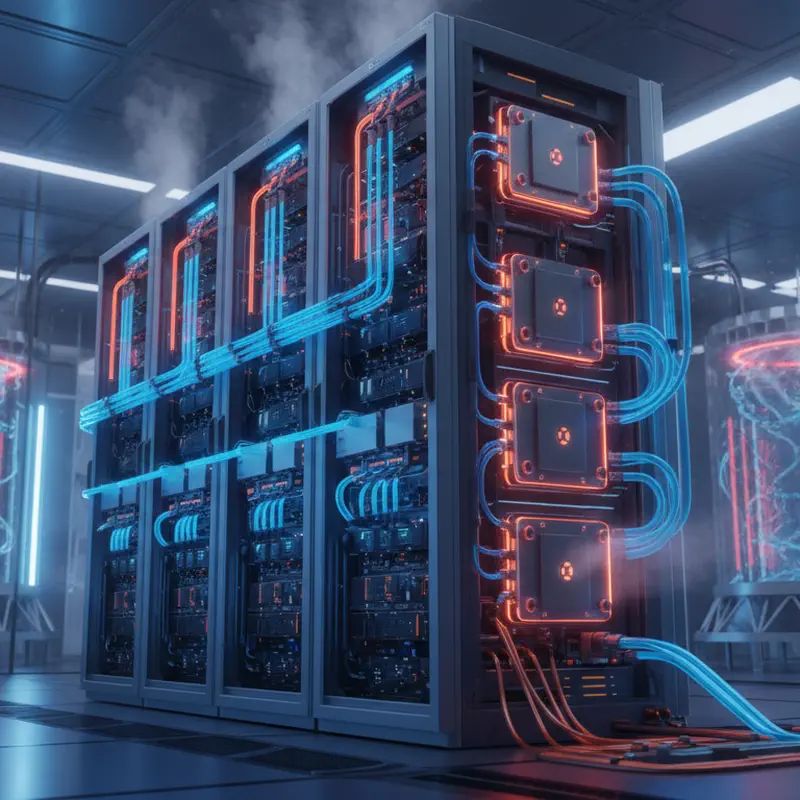

Think of an "AI factory" not as a place churning out robot parts, but as a massive, hyper-optimized data center. It's purpose-built, or heavily retrofitted, to train and run the AI models that are changing our world. These aren't your typical server farms, mind you. We're talking about racks upon racks of powerful, expensive, and power-hungry hardware. Graphics Processing Units, or GPUs, are the muscle behind modern AI, and they throw off a lot of heat when performing their calculations.

The demand for this kind of computational muscle is skyrocketing. Every time an LLM gets better, every time a new AI breakthrough emerges, it’s because somewhere, a whole lot of GPUs have been working overtime. This means more power flowing in, more processing happening, and, you guessed it, a whole lot more heat being generated. For your expensive hardware, it’s like trying to run a marathon in a sauna. Not ideal.

Companies are pouring billions into building these facilities. Meta, Applied Digital, Microsoft, NVIDIA: they're all in a race to build the infrastructure that will power the next industrial revolution. But simply building bigger boxes isn't enough. The sheer density of computing power in these new "AI factories" creates entirely new challenges that will bottleneck progress if not addressed head-on.

The Heat is On: Why Air Conditioning Just Won't Cut It Anymore

For decades, data centers relied on good old-fashioned air conditioning. Blow cold air over the hot servers, suck out the hot air, repeat. Simple, right? Well, that works fine when your servers are spaced out and each chip draws a modest amount of power. But when you pack thousands of advanced GPUs into a small footprint, with individual chips drawing on the order of a kilowatt, air simply isn't an efficient enough medium to carry that much heat away.
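To put a rough number on why air runs out of steam, here is a back-of-the-envelope sketch using the standard heat balance Q = ṁ · c_p · ΔT. The 100 kW rack and the 10 K coolant temperature rise are assumptions picked purely for illustration, not figures from any real facility, and water stands in for whatever coolant a real liquid loop would actually use. The fluid properties are approximate textbook values at room temperature.

```python
# Illustrative, assumed values: not measurements from any real facility.
AIR_DENSITY = 1.2        # kg/m^3, air near room temperature (approximate)
AIR_CP = 1005.0          # J/(kg*K), specific heat of air (approximate)
WATER_DENSITY = 997.0    # kg/m^3, water near room temperature (approximate)
WATER_CP = 4186.0        # J/(kg*K), specific heat of water (approximate)


def volumetric_flow_m3_per_s(heat_watts, density, specific_heat, delta_t_kelvin):
    """Coolant volume per second needed to carry away heat_watts.

    Uses the steady-state heat balance Q = m_dot * c_p * delta_T,
    then converts mass flow to volume flow with the fluid density.
    """
    if delta_t_kelvin <= 0:
        raise ValueError("delta_t_kelvin must be positive")
    mass_flow = heat_watts / (specific_heat * delta_t_kelvin)  # kg/s
    return mass_flow / density  # m^3/s


rack_heat_watts = 100_000.0  # hypothetical 100 kW rack (assumption)
delta_t = 10.0               # assumed coolant temperature rise, in kelvin

air_flow = volumetric_flow_m3_per_s(rack_heat_watts, AIR_DENSITY, AIR_CP, delta_t)
water_flow = volumetric_flow_m3_per_s(rack_heat_watts, WATER_DENSITY, WATER_CP, delta_t)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 1000:.2f} L/s")
print(f"Ratio: {air_flow / water_flow:.0f}x more air by volume")
```

Under these assumptions, the rack needs roughly 8 cubic meters of air every second, versus about 2.4 liters of water. The exact figures shift with the temperature rise and fluid you pick, but the gap of several thousand times by volume comes straight from the difference in density and specific heat.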

The problem isn't just about keeping the equipment from melting. Excess heat degrades performance, shortens the lifespan of those incredibly expensive components, and can lead to catastrophic failures. Imagine a supercomputer slowing down because it’s perpetually thermal-throttled. Or an entire rack of GPUs failing prematurely. That’s not just a headache; it’s a multi-million-dollar disaster.

This is where the massive investment in cooling infrastructure really kicks in. It's no longer an afterthought, a separate HVAC system humming in the background. It’s becoming an integrated, fundamental part of the design, as critical as the power delivery itself. And it has to be, because the heat load from these new AI workloads is absolutely staggering. We're talking gigawatt-class facilities here, drawing power equivalent to small cities, and nearly all of that electrical energy ends up as heat that has to be removed.

Ignoring advanced cooling in an AI factory is like bringing a garden hose to a skyscraper fire. It just won't work.

The Future is Liquid: A Refreshing Dip for Hot Hardware

So, if air isn’t cutting it, what's the solution? Liquid. That’s right, we’re moving away from air-cooled racks to systems that bring fluid directly to the hot spots. Think about your car engine. It uses liquid coolant to keep from overheating. Modern AI factories are adopting similar principles, but at a massive, industrialized scale.

Two main approaches are gaining traction. First, "direct-to-chip" cooling, where a liquid-filled cold plate sits directly on top of the GPU, drawing heat away much more efficiently than air ever could. It’s precise, targeted, and incredibly effective. Second, "immersion cooling," where entire servers or racks are submerged in a non-conductive dielectric fluid. It sounds wild, like something out of a sci-fi movie, but it offers unparalleled cooling capacity, allowing for incredibly dense computing clusters.

The benefits go beyond just keeping things cool. Liquid cooling can be significantly more energy-efficient than traditional air conditioning, reducing the massive operational costs associated with powering these facilities. It can also allow for heat recapture, where the waste heat is reused for other purposes, like heating buildings. It's a win-win: better performance for your AI, and a smaller environmental footprint. But building out this specialized infrastructure requires entirely new engineering, materials, and a hefty upfront investment.

The Cooling Investment: A Strategic Advantage, Not Just a Cost

Investing in advanced cooling for AI factories isn't just about throwing money at a problem; it's a strategic imperative. Johnson Controls and Legrand, among others, are now pouring millions into cooling specialists like Accelsius to scale solutions built specifically for these gigawatt-class AI facilities. This isn't just about making servers last longer, although that's a nice bonus.

This investment is about unlocking the true potential of AI. It means being able to run more powerful models, train them faster, and deploy them with greater reliability. It means pushing the boundaries of what AI can do, without hitting thermal limits. Those early adopters who embrace cutting-edge cooling will gain a significant competitive edge, allowing them to iterate faster and bring more innovative AI products to market.

Ultimately, the performance of our AI models is directly tied to the physical infrastructure beneath them. The next wave of AI innovation won't just be about smarter algorithms or better data; it will be built on the back of resilient, highly efficient, and expertly cooled hardware. So, while you might not see the liquid coolant flowing through a data center, rest assured, the future of AI depends on it.
