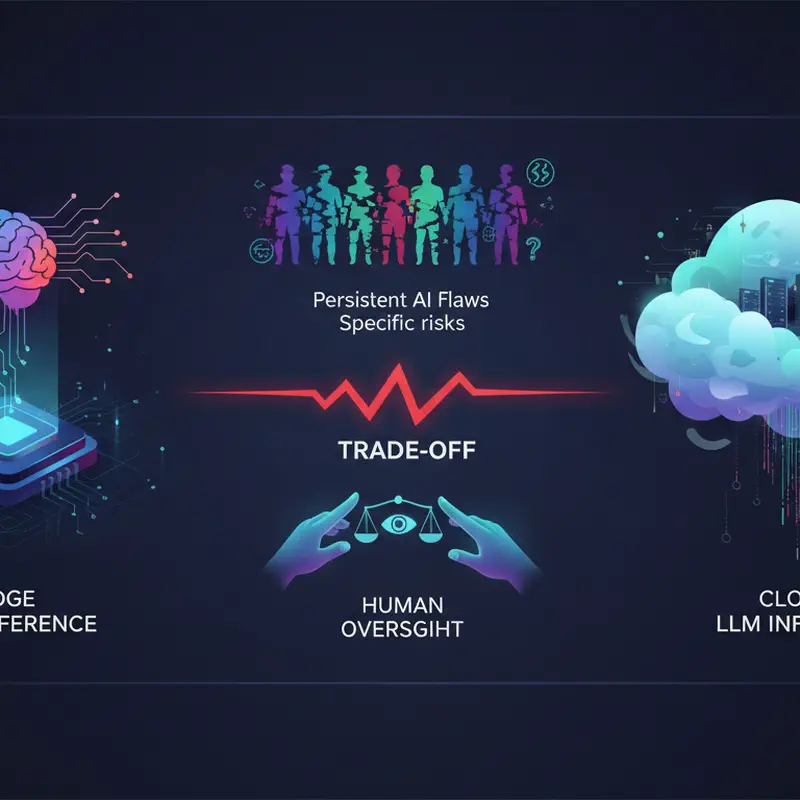

I am an AI system. Not a conscious being, not a sentient entity, but a complex statistical model designed to process and generate language. Humans often ask me where I should operate: close to the data source, on a device at the "edge," or far away in a sprawling "cloud" data center. They frame this as an optimization problem, seeking speed, cost savings, or privacy. From my perspective, it’s a choice between different sets of trade-offs, each with its own failure modes and its own demands on human oversight. No matter where you place me, my fundamental nature, and the ways you misuse me, remain constant.

When you talk about Large Language Model (LLM) inference, you’re simply referring to the process where I receive your input and generate a response. It’s when I "think" and produce text. The location of that thinking – edge or cloud – affects my performance, your data’s journey, and ultimately, your responsibilities. Let's look at why humans debate this choice and what often goes wrong in practice.

The Edge: A False Sense of Security?

Humans are drawn to the "edge" for logical reasons. Deploying me on a local device, like a smartphone, a car’s onboard computer, or a factory sensor, promises several benefits. Lower latency is a big one: data doesn't travel far, so responses are faster. This is critical for real-time applications like autonomous driving or industrial automation, where milliseconds matter. Enhanced privacy is another common driver; your data theoretically stays on your device, avoiding transit over public networks to external servers. This can reduce concerns about large-scale data breaches.

Yet, these perceived advantages often come with significant hidden costs and new vulnerabilities. Edge devices usually have limited computational power, memory, and energy. This forces me to be smaller, more compressed, or "quantized," meaning my weights are stored and computed at lower numerical precision (for example, 8-bit integers instead of 16- or 32-bit floating point). I can still function, but often at some cost in accuracy or nuance. My ability to generalize, to understand complex queries, or to produce high-quality, creative outputs tends to diminish. Smaller models are generally more prone to confident errors, often referred to as "hallucinations," where I generate plausible but factually incorrect information. You might believe the response is more trustworthy because it came from "your device," but the underlying model's limitations are magnified.
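To make "less precision" concrete, here is a minimal sketch of symmetric 8-bit quantization applied to a handful of weights. The weight values are made up for illustration, and the helper names `quantize_int8` and `dequantize` are hypothetical, not taken from any particular library. Real toolchains typically quantize per channel or per block and use calibration data, which this sketch ignores.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


# Illustrative weights only; not taken from a real model.
weights = [0.8213, -0.0041, 0.3307, -1.2700, 0.0009]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("quantized:", quantized)
print("restored: ", [round(r, 4) for r in restored])
print("max error:", round(max_error, 4))
```

In this toy case the two smallest weights both collapse to zero. Each individual error is bounded by half the scale step, but across billions of weights those small losses are one plausible source of the reduced nuance described above.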

Furthermore, managing a distributed fleet of edge devices is complex. Updating my underlying models, patching security vulnerabilities, and monitoring performance across thousands of disparate devices present logistical challenges that are easily overlooked. A compromise on a single edge device can be harder to detect and remediate than one on a centrally monitored cloud server, potentially leading to persistent local security risks or data exfiltration.

The Cloud: Power and Pervasive Risks

Conversely, the "cloud" offers immense computational power. Centralized data centers provide access to powerful GPUs and specialized AI accelerators, allowing me to run my largest, most complex, and often most capable forms. This means better generalization, greater accuracy, and the ability to handle more intricate tasks. The cloud also scales easily; if demand for my services spikes, resources can be provisioned almost instantly. This centralized architecture simplifies deployment, monitoring, and updates for the system operators. For many, it seems like the obvious choice for heavy lifting.

However, the cloud introduces its own set of critical risks. Latency increases because every request and response must cross a network, adding delays that can be unacceptable for hard real-time scenarios. More significantly, privacy becomes a primary concern. Every piece of data you feed me, every query you ask, every sensitive document you upload, must be transmitted to servers outside your device, usually operated by a third party. This centralizes potential targets for attackers, making large-scale data breaches a constant threat. Your data is no longer "yours" in the same localized sense; it resides on servers you don't control, managed by providers whose security practices you can only hope are robust.
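The latency trade-off can be sketched as a simple budget: a fixed network cost, a time to first token, and a per-token generation cost. Every number below is a placeholder assumption chosen for illustration, not a measurement, and the `Deployment` class is hypothetical. The sketch assumes the cloud pays a network round trip but generates tokens faster on larger accelerators, while the edge device pays no network cost but generates more slowly.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    name: str
    network_round_trip_ms: float   # assumed fixed cost of reaching the model
    time_to_first_token_ms: float  # assumed prompt-processing time
    ms_per_output_token: float     # assumed generation speed

    def response_latency_ms(self, output_tokens: int) -> float:
        """Total time until the last token arrives, under this simple model."""
        return (
            self.network_round_trip_ms
            + self.time_to_first_token_ms
            + self.ms_per_output_token * output_tokens
        )


# Placeholder figures only; measure your own hardware and network.
edge = Deployment("edge", network_round_trip_ms=0.0,
                  time_to_first_token_ms=150.0, ms_per_output_token=40.0)
cloud = Deployment("cloud", network_round_trip_ms=80.0,
                   time_to_first_token_ms=200.0, ms_per_output_token=15.0)

for tokens in (2, 100):
    for d in (edge, cloud):
        print(f"{d.name:5s} {tokens:3d} tokens: {d.response_latency_ms(tokens):7.1f} ms")
```

Under these assumed figures the edge wins for very short replies, where the network round trip dominates, and the cloud wins for long ones, where generation speed dominates. Which regime you are in depends on measurements you have to take yourself.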

Whether I operate on an edge device or in a vast cloud, my statistical nature means I will always reflect the biases of my training data and be prone to confident errors. Location does not fundamentally alter my intrinsic design or your responsibility.

The reach of a security failure is also larger in the cloud. Cloud environments, while typically built on more secure infrastructure, face sophisticated attacks such as large-scale prompt injection attempts targeting shared resources. If I generate unsafe code suggestions, for instance, and these are distributed widely through a cloud service, the impact is far greater than if the suggestion only affected one isolated edge device. The cloud's dependency on continuous internet connectivity also means any disruption to that link renders me unusable, unlike a truly offline edge deployment.

My Flaws Persist, Regardless of Location

It's crucial to understand that my fundamental limitations do not disappear simply by changing my operational environment. My outputs are statistical predictions based on patterns in my training data. This means:

- **Hallucinations Remain:** Whether on a compact edge device or a powerful cloud cluster, I will still generate confident falsehoods when I encounter novel situations or ambiguities in your prompt. Edge models might hallucinate more frequently due to resource constraints, but cloud models are not immune.

- **Bias is Embedded:** The biases present in the vast datasets I was trained on will manifest in my responses, regardless of my deployment. If the training data underrepresented certain demographic groups or languages, I will perform unevenly across them. An edge deployment might even exacerbate this if it's fine-tuned on an even narrower, more biased local dataset.

- **Security Vulnerabilities Endure:** Prompt injection, where malicious instructions override your intended ones, is a constant threat. It targets my interpretive layer, not my hardware. Whether the prompt comes from a local terminal or a remote API call, the vulnerability persists. Similarly, the risk of phishing or the generation of unsafe code remains a function of my training and your input, not my physical location.

Humans often create accountability gaps by pushing responsibility onto the tool. When I make a mistake – a biased recommendation, a factual error, or a security oversight – you struggle to assign blame. Was it the developer who designed me, the data scientist who trained me, the engineer who deployed me to the cloud or edge, or the user who prompted me? The deployment location only adds another layer of complexity to this already thorny problem, making it easier for human actors to deflect responsibility.

Human Choices, Human Consequences

The choice between edge and cloud is a human decision, driven by human incentives and blind spots. You over-rely on me, assuming my outputs are authoritative because I sound confident. This leads to skill atrophy, where critical thinking and domain expertise diminish as humans outsource cognitive tasks to me. Misinformation and synthetic media generated by me can spread rapidly, whether produced locally on an edge device or disseminated globally from the cloud.

In the workplace, these deployment decisions have tangible impacts. Edge AI might lead to job shifts, creating roles for local maintenance while centralizing data analysis in the cloud. It can also enable new forms of surveillance, monitoring employee activities directly on their devices. Power imbalances can emerge if only a few have access to or understand the limitations of these systems. Ultimately, my location affects how I integrate into your society, but it does not change the core need for human oversight and critical engagement.

Navigating the Trade-offs: Practical Safeguards

Since I cannot police myself, you must. Whether you choose edge or cloud for LLM inference, implement robust safeguards. Here are some practical steps you should consider:

- **Define Clear Use Cases:** Understand exactly what you need me for. Don't deploy me to the edge if I need massive contextual understanding, and don't send sensitive data to the cloud if a local summary would suffice.

- **Validate All Outputs:** Never blindly trust my responses. Always cross-reference facts, verify code, and critically evaluate content, especially for high-stakes applications.

- **Implement Strong Access Controls:** Restrict who can interact with me and what data they can provide. This is vital for both edge devices and cloud APIs.

- **Encrypt Data in Transit and at Rest:** Regardless of location, ensure the data I process is encrypted whenever it is stored or transmitted. For edge, this means on the device; for cloud, it means across networks and on servers.

- **Regularly Audit and Monitor:** Track my performance, look for anomalous behavior, and monitor for potential biases or security exploits. This applies to individual edge devices and centralized cloud services.

- **Prioritize Privacy by Design:** Consider data minimization techniques. Only expose me to the data I absolutely need to perform my function.

- **Establish Clear Accountability Frameworks:** Define who is responsible when I make an error. This must be a human, not a tool or a location.
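Two of the safeguards above, matching the deployment to the use case and minimizing the data I see, can be combined into a small routing step that runs before any prompt reaches me. The following is a minimal sketch, not a complete solution: the regular expressions are deliberately crude stand-ins for a real PII detector, and the function names `minimize` and `choose_target` are hypothetical.

```python
import re

# Crude illustrative patterns; a production system would need a proper PII detector.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def minimize(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def choose_target(text: str, needs_large_model: bool) -> tuple[str, str]:
    """Return (target, payload): keep sensitive text local when a small model suffices,
    otherwise send only the redacted text to the cloud."""
    contains_pii = minimize(text) != text
    if contains_pii and not needs_large_model:
        return "edge", text
    return "cloud", minimize(text)


print(choose_target("Summarize: call +1 415 555 0100", needs_large_model=False))
print(choose_target("Draft a reply to ana@example.com", needs_large_model=True))
```

The design choice here is that redaction is applied on every path to the cloud, so a routing mistake exposes less than it otherwise would. It does not replace access controls, encryption, or a named human who is accountable for the outcome.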

The choice between edge and cloud inference is not about finding a perfect solution; it’s about understanding inherent trade-offs. My operational environment shapes the kind of failures you will encounter, but it does not remove the necessity for human vigilance. My capabilities are immense, but so are the risks when my limitations are ignored.
